Dario Amodei: Balancing AI Innovation and Safety

By the time you read this—or any article about artificial intelligence (AI)—it’ll be out of date. AI is advancing that rapidly. And Dario Amodei is working to keep pace.

“It’s on an exponential curve, and we’re on the steep part of that curve right now,” says Amodei, 2007 Hertz Fellow and CEO and co-founder of Anthropic, an AI safety and research lab. Amodei believes that as AI grows, so do the risks. He admits he’s not certain. He hopes he’s wrong. And this is exactly why the AI space needs him.

Amodei’s career has grown exponentially too. Awarded a Hertz Fellowship in 2007, he won the Hertz Thesis Prize in 2011 for his doctoral thesis on neural circuits. Later, he worked at Baidu, on the Google Brain team, and at OpenAI, where he led the efforts to build GPT-2 and GPT-3. After co-founding Anthropic, he went on to build Claude, Anthropic’s family of large language models (LLMs).

Amodei acknowledges the influence of his Hertz Fellowship. “At every point in my career, it helped open doors that weren’t there before or exposed me to ideas that I wouldn’t have seen before.”

At Anthropic, Amodei is known as an honest and authentic leader who intentionally stays off the radar. “If people think of me as boring and low profile, this is actually kind of what I want,” says Amodei. “Because I want to defend my ability to think about things intellectually in a way that’s different from other people and isn’t tinged by the approval of other people.” Amodei knows what’s at stake in the AI race, and that it’s much bigger than one person.

Putting Humans at the Center

Amodei is one of seven founders of Anthropic, a group that includes his sibling Daniela Amodei and 2005 Hertz Fellow Jared Kaplan, all of whom left OpenAI over concerns about the company’s direction and AI safety. The name Anthropic is a nod to humanity, and a reminder of their mission to ensure that transformative AI helps people and society flourish.

Anthropic sees itself as just one piece of this evolving puzzle, which is why the company collaborates with civil society, government, academia, nonprofits, and industry to promote safety across the field.

Unlike most AI companies, Anthropic is a safety-first company from the ground up. But other companies are starting to take note. Recently, at least 20 companies joined Anthropic in support of a Tech Accord to Combat Deceptive Use of AI in 2024 Elections. And the AI Safety Institute, formed in November 2023, recently announced collaborations with Anthropic and, perhaps surprisingly, OpenAI, which is also restructuring into a public benefit corporation, like Anthropic.

Amodei welcomes all of it. “Our existence in the ecosystem hopefully causes other organizations to become more like us,” he says. “That’s our general aim in the world and part of our theory of change.”

Balancing Bigger with Better

Anthropic seeks to not just create incrementally better models, but models that interact with people more meaningfully. That’s why they’re committed to aligning with human values and working to ensure positive societal impacts. To do so, Anthropic co-founder Chris Olah pioneered a new scientific field known as mechanistic interpretability, seeking a deeper understanding of the inner workings of AI.

Anthropic was also the first company to use what’s called Constitutional AI, where its LLMs are given a set of principles to discourage and mitigate broadly harmful outputs. And it was the first to establish a form of voluntary self-regulation called a Responsible Scaling Policy, a series of technical and organizational protocols to help the company manage the risks faced when developing increasingly capable AI systems. These protocols include hands-on, all-in “red teaming” or adversarial testing.

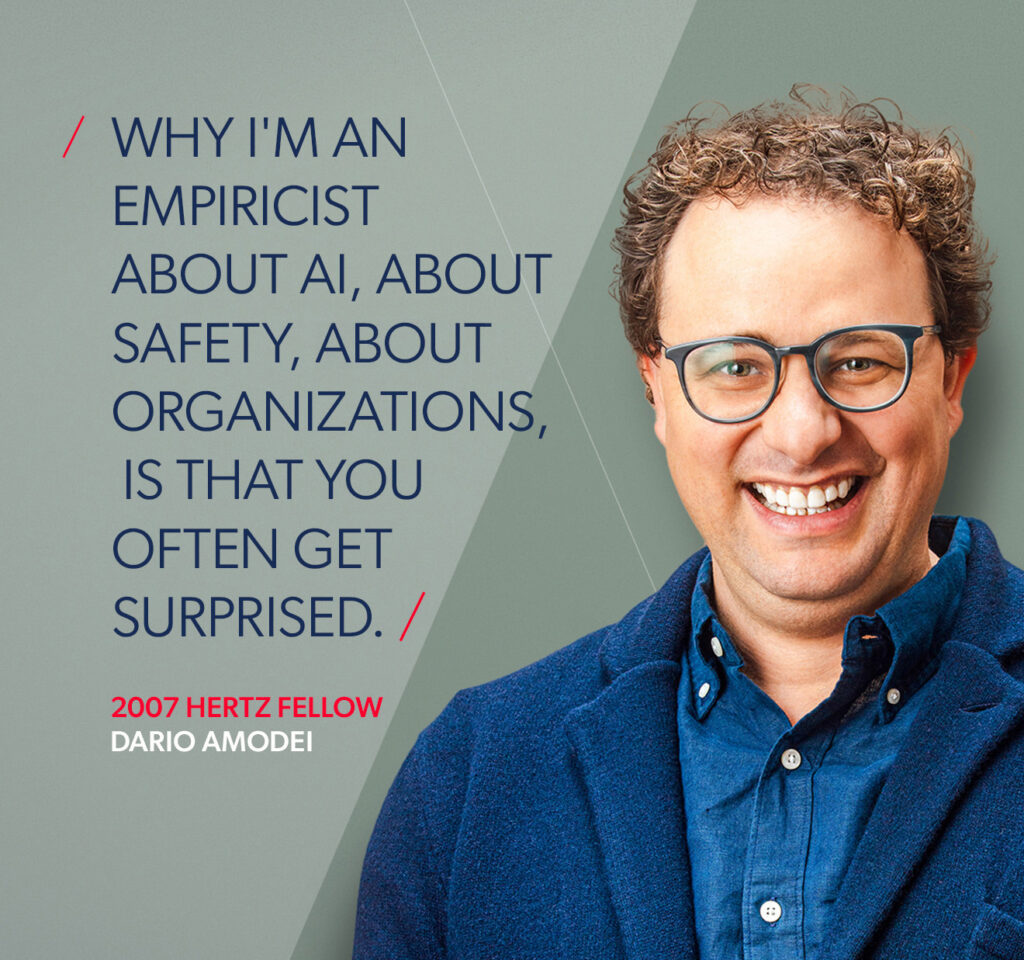

“Why I’m an empiricist about AI, about safety, about organizations, is that you often get surprised,” says Amodei.

Through their research, Anthropic endeavors to build reliable, interpretable, steerable AI systems. Through policy, they work to enforce acceptable use. But they are realistic about the challenges.

Expect to Be Surprised

“We expect that 2024 will see surprising uses of AI systems,” reads frank language on the Anthropic website, “uses that were not anticipated by their own developers.”

Many challenges are already in front of us, including algorithmic bias, privacy concerns, and the manipulation of public opinion through misinformation. The last of these is something Anthropic is deeply concerned about, given the number of high-profile elections taking place around the world this year. It’s not hyperbolic to say national security and geopolitical stability are at stake.

Because so much is unknown, the public and press are left to speculate wildly, swinging from utopian hype to dystopian dread. What happens when AI is as smart as humans? What happens when it’s smarter?

Yet, there are reasons to remain optimistic. One of those reasons is that Amodei and his colleagues are on the case—working to ensure that AI has a positive impact on society as it becomes increasingly advanced and capable, and inspiring the industry to join them. And in the meantime, the positive use cases for AI are mounting, from disease detection and wildfire detection to a cure for cancer and maybe even a cure for conspiracy theories.

By mitigating the potential for great harm, Amodei hopes we’ll realize the promise of greater good.